This is the full developer documentation for KARMA OpenMedEvalKit

# KARMA-OpenMedEvalKit

> Knowledge Assessment and Reasoning for Medical Applications - An evaluation framework for medical AI models.

## Why KARMA?

[Section titled “Why KARMA?”](#why-karma)

KARMA is designed for researchers, developers, and healthcare organizations who need reliable evaluation of medical AI systems.

Extensible

Bring your own model, dataset or even metric. Integrated with Huggingface and also supports local evaluation.

[Add your own →](/user-guide/add-your-own/add-model/)

Fast & Efficient

Process thousands of medical examples efficiently with intelligent caching and batch processing.

[See caching →](/caching)

Multi-Modal Ready

Support for text, images, and audio evaluation across multiple datasets.

[See available datasets →](/user-guide/datasets/datasets_overview)

Model Agnostic

Works with any model - Qwen, MedGemma, Bedrock-SDK, OpenAI-SDK or your custom architecture with unified interface.

[See available models →](/user-guide/models/built-in-models/)

## Quick Start

[Section titled “Quick Start”](#quick-start)

Get started with KARMA in minutes:

```bash

# Install KARMA

pip install karma-medeval

# Run your first evaluation

karma eval --model "Qwen/Qwen3-0.6B" --datasets openlifescienceai/pubmedqa --max-samples 3

```

## Example Output

[Section titled “Example Output”](#example-output)

```bash

$ karma eval --model "Qwen/Qwen3-0.6B" --datasets openlifescienceai/pubmedqa --max-samples 3

{

"openlifescienceai/pubmedqa": {

"metrics": {

"exact_match": {

"score": 0.3333333333333333,

"evaluation_time": 0.9702351093292236,

"num_samples": 3

}

},

"task_type": "mcqa",

"status": "completed",

"dataset_args": {},

"evaluation_time": 7.378399848937988

},

"_summary": {

"model": "Qwen/Qwen3-0.6B",

"model_path": "Qwen/Qwen3-0.6B",

"total_datasets": 1,

"successful_datasets": 1,

"total_evaluation_time": 7.380354166030884,

"timestamp": "2025-07-22 18:43:07"

}

}

```

## Key Features

[Section titled “Key Features”](#key-features)

* **Registry-Based Architecture**: Auto-discovery of models, datasets, and metrics

* **Smart Caching**: DuckDB and DynamoDB backends for faster re-evaluations

* **Extensible Design**: Easy integration of custom models, datasets, and metrics

* **Rich CLI**: Beautiful progress bars, formatted outputs, and help

* **Standards-Based**: Built on PyTorch and HuggingFace Transformers

## Getting Started

[Section titled “Getting Started”](#getting-started)

Installation

Multiple installation methods with uv, pip, or development setup.

[Install KARMA →](/user-guide/installation/)

Basic Usage

Learn the CLI commands and start evaluating your first model.

[Learn CLI →](/user-guide/cli-basics/)

Add Your Own

Extend KARMA with custom models, datasets, and evaluation metrics.

[Customize →](/user-guide/add-your-own/add-model/)

Supported Resources

Complete list of available models, datasets, and metrics.

[View Resources →](/supported-resources/)

## Release resources

[Section titled “Release resources”](#release-resources)

[KARMA release blog ](http://info.eka.care/services/introducing-karma-openmedevalkit-an-open-source-framework-for-medical-ai-evaluation)Read about KARMA

[4 novel healthcare datasets ](< http://info.eka.care/services/advancing-healthcare-ai-evaluation-in-india-ekacare-releases-four-evaluation-datasets>)Read about the datasets released along with KARMA

[Beyond WER - SemWER ](http://info.eka.care/services/beyond-traditional-wer-the-critical-need-for-semantic-wer-in-asr-for-indian-healthcare)Read about the two new metrics introduced in KARMA for ASR

Ready to evaluate your medical AI models? [Get started with installation →](/user-guide/installation/)

# Core Components of KARMA

This document defines the four core components of KARMA’s evaluation system and how they interact with each other.

1. Models

2. Datasets

3. Metrics

4. Processors

## Data Flow Sequence

[Section titled “Data Flow Sequence”](#data-flow-sequence)

```

sequenceDiagram

participant CLI

participant Orchestrator

participant Registry

participant Model

participant Dataset

participant Processor

participant Metrics

participant Cache

CLI->>Orchestrator: karma eval model --datasets ds1

Orchestrator->>Registry: discover_all_registries()

Registry-->>Orchestrator: components metadata

Orchestrator->>Model: initialize with config

Orchestrator->>Dataset: initialize with args

Orchestrator->>Processor: initialize processors

loop For each dataset

Orchestrator->>Dataset: create dataset instance

Dataset->>Processor: apply postprocessors

loop For each batch

Dataset->>Model: provide samples

Model->>Cache: check cache

alt Cache miss

Model->>Model: run inference

Model->>Cache: store results

end

Model-->>Dataset: return predictions

Dataset->>Dataset: extract_prediction()

Dataset->>Processor: postprocess predictions

Processor-->>Dataset: processed text

Dataset->>Metrics: evaluate(predictions, references)

Metrics-->>Dataset: scores

end

Dataset-->>Orchestrator: evaluation results

end

Orchestrator-->>CLI: aggregated results

```

## Component Interaction Diagram

[Section titled “Component Interaction Diagram”](#component-interaction-diagram)

```

graph TD

%% CLI Layer

CLI[CLI Command

karma eval model --datasets ds1,ds2]

%% Orchestrator Layer

ORCH[Orchestrator

MultiDatasetOrchestrator]

%% Registry System

MR[Model Registry]

DR[Dataset Registry]

MetR[Metrics Registry]

PR[Processor Registry]

%% Core Components

MODEL[Model

BaseModel]

DATASET[Dataset

BaseMultimodalDataset]

METRICS[Metrics

BaseMetric]

PROC[Processors

BaseProcessor]

%% Benchmark

BENCH[Benchmark

Evaluation Engine]

%% Cache System

CACHE[Cache Manager

DuckDB/DynamoDB]

%% Data Flow

CLI --> |parse args| ORCH

ORCH --> |discover| MR

ORCH --> |discover| DR

ORCH --> |discover| MetR

ORCH --> |discover| PR

MR --> |create| MODEL

DR --> |create| DATASET

MetR --> |create| METRICS

PR --> |create| PROC

ORCH --> |orchestrate| BENCH

BENCH --> |inference| MODEL

BENCH --> |iterate| DATASET

BENCH --> |compute| METRICS

BENCH --> |cache lookup/store| CACHE

DATASET --> |postprocess| PROC

DATASET --> |extract predictions| MODEL

MODEL --> |predictions| DATASET

DATASET --> |processed data| METRICS

PROC --> |normalized text| METRICS

%% Configuration Flow

CLI -.-> |--model-args| MODEL

CLI -.-> |--dataset-args| DATASET

CLI -.-> |--metric-args| METRICS

CLI -.-> |--processor-args| PROC

%% Styling

classDef cli fill:#e1f5fe

classDef orchestrator fill:#f3e5f5

classDef registry fill:#fff3e0

classDef component fill:#e8f5e8

classDef benchmark fill:#fff8e1

classDef cache fill:#fce4ec

class CLI cli

class ORCH orchestrator

class MR,DR,MetR,PR registry

class MODEL,DATASET,METRICS,PROC component

class BENCH benchmark

class CACHE cache

```

This architecture ensures clean separation of concerns while enabling flexible configuration and robust error handling throughout the evaluation process.

# Sanity benchmark

To ensure that we have implemented the datasets loading, model invocation and metric calculation correctly, we have invoked the model and have reproduced numbers.

## MedGemma-4B Reproduction

[Section titled “MedGemma-4B Reproduction”](#medgemma-4b-reproduction)

In case of Medgemma, we have been able to reproduce the results for most datasets as claimed in their technical report and huggingface readme page.

# Use KARMA with an LLM

Navigate to [llms-full.txt](https://karma.eka.care/llms-full.txt), copy the documentation from there and paste into your LLM and ask questions.

The llms.txt file has been generated based on these docs and found it to work reliably with claude.

# Caching

KARMA saves the model’s predictions locally to avoid redundant computations. This ensures that running multiple metrics or extending datasets is trivial.

## How are items cached?

[Section titled “How are items cached?”](#how-are-items-cached)

KARMA caches at a sample level for each evaluated model + configuration and dataset combinations. For example, if we run evalution on pubmedqa with the Qwen3-0.6B model, we will cache for each of the configurations. So if temperature is changed and evalution is run once again, then model will be invoked again. However, if only a new metric has been added along with exact\_match on the dataset, then the cached model outputs are reused.

Caching is hugely beneficial for ASR related models as well since the metric computation also evolves over time. For example, if we run evaluation on a dataset with a new metric, the cached model outputs are reused.

## DuckDB Caching

[Section titled “DuckDB Caching”](#duckdb-caching)

DuckDB is a lightweight, in-memory, columnar database that is used by KARMA to cache the model’s predictions. This the default way of caching.

## DynamoDB Caching

[Section titled “DynamoDB Caching”](#dynamodb-caching)

DynamoDB is a NoSQL database service provided by Amazon Web Services (AWS). KARMA can also use DynamoDB to cache model predictions. This is useful for large-scale deployments where the model predictions need to be stored in a highly scalable and durable manner.

To use DynamoDB caching, you need to configure the following environment variables:

* `AWS_ACCESS_KEY_ID`: Your AWS access key ID.

* `AWS_SECRET_ACCESS_KEY`: Your AWS secret access key.

* `AWS_REGION`: The AWS region where your DynamoDB table is located.

Once you have configured these environment variables, you can enable DynamoDB caching by setting the `KARMA_CACHE_TYPE` environment variable to `dynamodb`.

# karma eval

> Complete reference for the karma eval command

The `karma eval` command is the core of KARMA, used to evaluate models on healthcare datasets.

## Usage

[Section titled “Usage”](#usage)

```bash

karma eval [OPTIONS]

```

## Description

[Section titled “Description”](#description)

Evaluate a model on healthcare datasets. This command evaluates a specified model across one or more healthcare datasets, with support for dataset-specific arguments and rich output.

## Required Options

[Section titled “Required Options”](#required-options)

| Option | Description |

| -------------- | ------------------------------------------------------------------------------------------- |

| `--model TEXT` | Model name from registry (e.g., ‘Qwen/Qwen3-0.6B’, ‘google/medgemma-4b-it’) **\[required]** |

## Optional Arguments

[Section titled “Optional Arguments”](#optional-arguments)

| Option | Type | Default | Description |

| ---------------------------- | --------------- | ------------ | ----------------------------------------------------------------------------------------------- |

| `--model-path TEXT` | TEXT | - | Model path (local path or HuggingFace model ID). If not provided, uses path from model metadata |

| `--datasets TEXT` | TEXT | all | Comma-separated dataset names (default: evaluate on all datasets) |

| `--dataset-args TEXT` | TEXT | - | Dataset arguments in format ‘dataset:key=val,key2=val2;dataset2:key=val’ |

| `--processor-args TEXT` | TEXT | - | Processor arguments in format ‘dataset.processor:key=val,key2=val2;dataset2.processor:key=val’ |

| `--metric-args TEXT` | TEXT | - | Metric arguments in format ‘metric\_name:key=val,key2=val2;metric2:key=val’ |

| `--batch-size INTEGER` | 1-128 | 8 | Batch size for evaluation |

| `--cache / --no-cache` | FLAG | enabled | Enable or disable caching for evaluation |

| `--output TEXT` | TEXT | results.json | Output file path |

| `--format` | table\|json | table | Results display format |

| `--save-format` | json\|yaml\|csv | json | Results save format |

| `--progress / --no-progress` | FLAG | enabled | Show progress bars during evaluation |

| `--interactive` | FLAG | false | Interactively prompt for missing dataset, processor, and metric arguments |

| `--dry-run` | FLAG | false | Validate arguments and show what would be evaluated without running |

| `--model-config TEXT` | TEXT | - | Path to model configuration file (JSON/YAML) with model-specific parameters |

| `--model-args TEXT` | TEXT | - | Model parameter overrides as JSON string (e.g., ’{“temperature”: 0.7, “top\_p”: 0.9}‘) |

| `--max-samples TEXT` | TEXT | - | Maximum number of samples to use for evaluation (helpful for testing) |

| `--verbose` | FLAG | false | Enable verbose output |

| `--refresh-cache` | FLAG | false | Skip cache lookup and force regeneration of all results |

## Examples

[Section titled “Examples”](#examples)

### Basic Evaluation

[Section titled “Basic Evaluation”](#basic-evaluation)

```bash

karma eval --model "Qwen/Qwen3-0.6B" --datasets "openlifescienceai/pubmedqa"

```

### Multiple Datasets

[Section titled “Multiple Datasets”](#multiple-datasets)

```bash

karma eval --model "Qwen/Qwen3-0.6B" --datasets "openlifescienceai/pubmedqa,openlifescienceai/medmcqa"

```

### With Dataset Arguments

[Section titled “With Dataset Arguments”](#with-dataset-arguments)

```bash

karma eval --model "ai4bharat/indic-conformer-600m-multilingual" \

--datasets "ai4bharat/IN22-Conv" \

--dataset-args "ai4bharat/IN22-Conv:source_language=en,target_language=hi"

```

### With Processor Arguments

[Section titled “With Processor Arguments”](#with-processor-arguments)

```bash

karma eval --model "ai4bharat/indic-conformer-600m-multilingual" \

--datasets "ai4bharat/IN22-Conv" \

--processor-args "ai4bharat/IN22-Conv.devnagari_transliterator:source_script=en,target_script=hi"

```

### With Metric Arguments

[Section titled “With Metric Arguments”](#with-metric-arguments)

```bash

karma eval --model "Qwen/Qwen3-0.6B" \

--datasets "Tonic/Health-Bench-Eval-OSS-2025-07" \

--metric-args "rubric_evaluation:provider_to_use=openai,model_id=gpt-4o-mini,batch_size=5"

```

### With Model Configuration File

[Section titled “With Model Configuration File”](#with-model-configuration-file)

```bash

karma eval --model "Qwen/Qwen3-0.6B" \

--datasets "openlifescienceai/pubmedqa" \

--model-config "config/qwen_medical.json"

```

### With Model Parameter Overrides

[Section titled “With Model Parameter Overrides”](#with-model-parameter-overrides)

```bash

karma eval --model "Qwen/Qwen3-0.6B" \

--datasets "openlifescienceai/pubmedqa" \

--model-args '{"temperature": 0.3, "max_tokens": 1024, "enable_thinking": true}'

```

### Testing with Limited Samples

[Section titled “Testing with Limited Samples”](#testing-with-limited-samples)

```bash

karma eval --model "Qwen/Qwen3-0.6B" \

--datasets "openlifescienceai/pubmedqa" \

--max-samples 10 --verbose

```

### Interactive Mode

[Section titled “Interactive Mode”](#interactive-mode)

```bash

karma eval --model "Qwen/Qwen3-0.6B" --interactive

```

### Dry Run Validation

[Section titled “Dry Run Validation”](#dry-run-validation)

```bash

karma eval --model "Qwen/Qwen3-0.6B" \

--datasets "openlifescienceai/pubmedqa" \

--dry-run --model-args '{"temperature": 0.5}'

```

### Force Cache Refresh

[Section titled “Force Cache Refresh”](#force-cache-refresh)

```bash

karma eval --model "Qwen/Qwen3-0.6B" \

--datasets "openlifescienceai/pubmedqa" \

--refresh-cache

```

## Configuration Priority

[Section titled “Configuration Priority”](#configuration-priority)

Model parameters are applied in the following priority order (highest to lowest):

1. **CLI `--model-args`** - Highest priority

2. **Config file (`--model-config`)** - Overrides metadata defaults

3. **Model metadata defaults** - From registry

4. **CLI `--model-path`** - Sets model path if metadata doesn’t provide one

## Configuration File Formats

[Section titled “Configuration File Formats”](#configuration-file-formats)

### JSON Format

[Section titled “JSON Format”](#json-format)

```json

{

"temperature": 0.7,

"max_tokens": 2048,

"top_p": 0.9,

"enable_thinking": true

}

```

### YAML Format

[Section titled “YAML Format”](#yaml-format)

```yaml

temperature: 0.7

max_tokens: 2048

top_p: 0.9

enable_thinking: true

```

## Common Issues

[Section titled “Common Issues”](#common-issues)

### Model Not Found

[Section titled “Model Not Found”](#model-not-found)

```bash

karma list models

```

### Dataset Not Found

[Section titled “Dataset Not Found”](#dataset-not-found)

```bash

karma list datasets

```

### Invalid JSON in model-args

[Section titled “Invalid JSON in model-args”](#invalid-json-in-model-args)

```bash

# Wrong

--model-args '{temperature: 0.7}'

# Correct

--model-args '{"temperature": 0.7}'

```

## See Also

[Section titled “See Also”](#see-also)

* [Running Evaluations Guide](../user-guide/running-evaluations.md)

* [Model Configuration](../user-guide/models/model-configuration.md)

* [CLI Basics](../user-guide/cli-basics.md)

# karma info

> Complete reference for the karma info commands

The `karma info` command group provides detailed information about models, datasets, and system status.

## Usage

[Section titled “Usage”](#usage)

```bash

karma info [COMMAND] [OPTIONS] [ARGUMENTS]

```

## Subcommands

[Section titled “Subcommands”](#subcommands)

* `karma info model ` - Get detailed information about a specific model

* `karma info dataset ` - Get detailed information about a specific dataset

* `karma info system` - Get system information and status

***

## karma info model

[Section titled “karma info model”](#karma-info-model)

Get detailed information about a specific model including its class details, module location, and implementation info.

### Usage

[Section titled “Usage”](#usage-1)

```bash

karma info model MODEL_NAME [OPTIONS]

```

### Arguments

[Section titled “Arguments”](#arguments)

| Argument | Description |

| ------------ | ---------------------------------------------------------- |

| `MODEL_NAME` | Name of the model to get information about **\[required]** |

### Options

[Section titled “Options”](#options)

| Option | Type | Default | Description |

| ------------- | ---- | ------- | --------------------------------------------- |

| `--show-code` | FLAG | false | Show model class code location and basic info |

### Examples

[Section titled “Examples”](#examples)

```bash

# Basic model information

karma info model "Qwen/Qwen3-0.6B"

# Show code location details

karma info model "google/medgemma-4b-it" --show-code

# Check model that might not exist

karma info model "unknown-model"

```

### Output

[Section titled “Output”](#output)

```bash

$ karma info model "Qwen/Qwen3-0.6B" --show-code

╭────────────────────────────────────────────────────────────────────╮

│ KARMA: Knowledge Assessment and Reasoning for Medical Applications │

╰────────────────────────────────────────────────────────────────────╯

Model Information: Qwen/Qwen3-0.6B

──────────────────────────────────────────────────

Model: Qwen/Qwen3-0.6B

Name Qwen/Qwen3-0.6B

Class QwenThinkingLLM

Module karma.models.qwen

Description:

╭──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╮

│ Qwen language model with specialized thinking capabilities. │

╰──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╯

Code Location:

File location not available

Constructor Signature:

QwenThinkingLLM(self, model_name_or_path: str, device: str = 'mps', max_tokens: int = 32768, temperature: float = 0.7, top_p: float = 0.9, top_k: Optional = None,

enable_thinking: bool = False, **kwargs)

Usage Examples:

Basic evaluation:

karma eval --model "Qwen/Qwen3-0.6B" --datasets openlifescienceai/pubmedqa

With multiple datasets:

karma eval --model "Qwen/Qwen3-0.6B" \

--datasets openlifescienceai/pubmedqa,openlifescienceai/mmlu_professional_medicine

With custom arguments:

karma eval --model "Qwen/Qwen3-0.6B" \

--datasets openlifescienceai/pubmedqa \

--max-samples 100 --batch-size 4

Interactive mode:

karma eval --model "Qwen/Qwen3-0.6B" --interactive

✓ Model information retrieved successfully

```

***

## karma info dataset

[Section titled “karma info dataset”](#karma-info-dataset)

Get detailed information about a specific dataset including its requirements, supported metrics, and usage examples.

### Usage

[Section titled “Usage”](#usage-2)

```bash

karma info dataset DATASET_NAME [OPTIONS]

```

### Arguments

[Section titled “Arguments”](#arguments-1)

| Argument | Description |

| -------------- | ------------------------------------------------------------ |

| `DATASET_NAME` | Name of the dataset to get information about **\[required]** |

### Options

[Section titled “Options”](#options-1)

| Option | Type | Default | Description |

| ----------------- | ---- | ------- | ---------------------------------- |

| `--show-examples` | FLAG | false | Show usage examples with arguments |

| `--show-code` | FLAG | false | Show dataset class code location |

### Examples

[Section titled “Examples”](#examples-1)

```bash

# Basic dataset information

karma info dataset openlifescienceai/pubmedqa

# Show usage examples

karma info dataset "ai4bharat/IN22-Conv" --show-examples

# Show code location

karma info dataset "mdwiratathya/SLAKE-vqa-english" --show-code

# Get info for dataset with required args

karma info dataset "ekacare/MedMCQA-Indic" --show-examples

```

### Output

[Section titled “Output”](#output-1)

```bash

karma info dataset "ai4bharat/IN22-Conv" --show-examples

╭────────────────────────────────────────────────────────────────────╮

│ KARMA: Knowledge Assessment and Reasoning for Medical Applications │

╰────────────────────────────────────────────────────────────────────╯

[13:13:57] INFO Imported model module: karma.models.aws_bedrock model_registry.py:235

INFO Imported model module: karma.models.aws_transcribe_asr model_registry.py:235

[13:13:58] INFO Imported model module: karma.models.base_hf_llm model_registry.py:235

INFO Imported model module: karma.models.docassist_chat model_registry.py:235

INFO Imported model module: karma.models.eleven_labs model_registry.py:235

[13:13:59] INFO Imported model module: karma.models.gemini_asr model_registry.py:235

INFO Imported model module: karma.models.indic_conformer model_registry.py:235

INFO Imported model module: karma.models.medgemma model_registry.py:235

INFO Imported model module: karma.models.openai_asr model_registry.py:235

INFO Imported model module: karma.models.openai_llm model_registry.py:235

INFO Imported model module: karma.models.qwen model_registry.py:235

INFO Imported model module: karma.models.whisper model_registry.py:235

INFO Registry discovery completed: 4/4 successful, 1 cache hits, total time: 1.36s registry_manager.py:70

Dataset Information: ai4bharat/IN22-Conv

──────────────────────────────────────────────────

Dataset: ai4bharat/IN22-Conv

Name ai4bharat/IN22-Conv

Class IN22ConvDataset

Module karma.eval_datasets.in22conv_dataset

Task Type translation

Metrics bleu

Processors devnagari_transliterator

Required Args source_language, target_language

Optional Args domain, processors, confinement_instructions

Default Args source_language=en, domain=conversational

Description:

╭──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╮

│ IN22Conv PyTorch Dataset implementing the new multimodal interface. │

│ Translates from English to specified Indian language. │

╰──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╯

Usage Examples:

With required arguments:

karma eval --model "Qwen/Qwen3-0.6B" \

--datasets ai4bharat/IN22-Conv \

--dataset-args "ai4bharat/IN22-Conv:source_language=en,target_language=hi"

With optional arguments:

karma eval --model "Qwen/Qwen3-0.6B" \

--datasets ai4bharat/IN22-Conv \

--dataset-args "ai4bharat/IN22-Conv:source_language=en,target_language=hi,domain=conversational,processors=,confinement_instructions="

Interactive mode (prompts for arguments):

karma eval --model "Qwen/Qwen3-0.6B" \

--datasets ai4bharat/IN22-Conv --interactive

✓ Dataset information retrieved successfully

```

## karma info system

[Section titled “karma info system”](#karma-info-system)

Get system information and status including available resources, cache status, and environment details.

### Usage

[Section titled “Usage”](#usage-3)

```bash

karma info system [OPTIONS]

```

### Options

[Section titled “Options”](#options-2)

| Option | Type | Default | Description |

| ------------------- | ---- | ---------- | ------------------------------- |

| `--cache-path TEXT` | TEXT | ./cache.db | Path to cache database to check |

### Examples

[Section titled “Examples”](#examples-2)

```bash

# Basic system information

karma info system

# Check specific cache location

karma info system --cache-path /path/to/cache.db

# Check system status

karma info system --cache-path ~/.karma/cache.db

```

### Output

[Section titled “Output”](#output-2)

```bash

karma info system

╭────────────────────────────────────────────────────────────────────╮

│ KARMA: Knowledge Assessment and Reasoning for Medical Applications │

╰────────────────────────────────────────────────────────────────────╯

Discovering system resources...

[13:14:43] INFO Imported model module: karma.models.aws_bedrock model_registry.py:235

INFO Imported model module: karma.models.aws_transcribe_asr model_registry.py:235

INFO Imported model module: karma.models.base_hf_llm model_registry.py:235

INFO Imported model module: karma.models.docassist_chat model_registry.py:235

INFO Imported model module: karma.models.eleven_labs model_registry.py:235

[13:14:44] INFO Imported model module: karma.models.gemini_asr model_registry.py:235

INFO Imported model module: karma.models.indic_conformer model_registry.py:235

INFO Imported model module: karma.models.medgemma model_registry.py:235

INFO Imported model module: karma.models.openai_asr model_registry.py:235

INFO Imported model module: karma.models.openai_llm model_registry.py:235

INFO Imported model module: karma.models.qwen model_registry.py:235

INFO Imported model module: karma.models.whisper model_registry.py:235

INFO Registry discovery completed: 4/4 successful, 1 cache hits, total time: 1.24s registry_manager.py:70

System Information

──────────────────────────────────────────────────

System Information

Available Models 21

Available Datasets 21

Cache Database ✓ Available (5.0 MB)

Cache Path cache.db

Environment:

Python: 3.10.15

Platform: macOS-15.5-arm64-arm-64bit

Architecture: arm64

Karma CLI: development

Dependencies:

✓ PyTorch: 2.7.1

✓ Transformers: 4.53.0

✓ HuggingFace Datasets: 3.6.0

✓ Rich: unknown

✓ Click: 8.2.1

✓ Weave: 0.51.54

✓ DuckDB: 1.3.1

Usage Examples:

List available resources:

karma list models

karma list datasets

Get detailed information:

karma info model "Qwen/Qwen3-0.6B"

karma info dataset openlifescienceai/pubmedqa

Run evaluation:

karma eval --model "Qwen/Qwen3-0.6B" --datasets openlifescienceai/pubmedqa

Check cache status:

karma info system --cache-path ./cache.db

✓ System information retrieved successfully

```

## Common Usage Patterns

[Section titled “Common Usage Patterns”](#common-usage-patterns)

### Model Discovery and Validation

[Section titled “Model Discovery and Validation”](#model-discovery-and-validation)

```bash

# 1. List available models

karma list models

# 2. Get detailed info about a specific model

karma info model "Qwen/Qwen3-0.6B"

# 3. Check model implementation

karma info model "Qwen/Qwen3-0.6B" --show-code

```

### Dataset Analysis

[Section titled “Dataset Analysis”](#dataset-analysis)

```bash

# 1. Find datasets for a task

karma list datasets --task-type mcqa

# 2. Get detailed dataset info

karma info dataset "openlifescienceai/medmcqa"

# 3. See usage examples with arguments

karma info dataset "ai4bharat/IN22-Conv" --show-examples

```

### System Debugging

[Section titled “System Debugging”](#system-debugging)

```bash

# Check overall system status

karma info system

# Verify dependencies

karma info system --cache-path ~/.karma/cache.db

# Check cache status

karma info system --cache-path ./evaluation_cache.db

```

### Development Workflow

[Section titled “Development Workflow”](#development-workflow)

```bash

# Quick resource check

karma info model "new-model-name"

karma info dataset "new-dataset-name" --show-code

# System health check

karma info system

```

## Error Handling

[Section titled “Error Handling”](#error-handling)

### Model Not Found

[Section titled “Model Not Found”](#model-not-found)

```bash

$ karma info model "nonexistent-model"

Error: Model 'nonexistent-model' not found in registry

Available models: Qwen/Qwen3-0.6B, google/medgemma-4b-it, ...

```

### Dataset Not Found

[Section titled “Dataset Not Found”](#dataset-not-found)

```bash

$ karma info dataset "nonexistent-dataset"

Error: Dataset 'nonexistent-dataset' not found in registry

Available datasets: openlifescienceai/pubmedqa, openlifescienceai/medmcqa, ...

```

### Invalid Cache Path

[Section titled “Invalid Cache Path”](#invalid-cache-path)

```bash

$ karma info system --cache-path /invalid/path/cache.db

Cache Status: Path not accessible

```

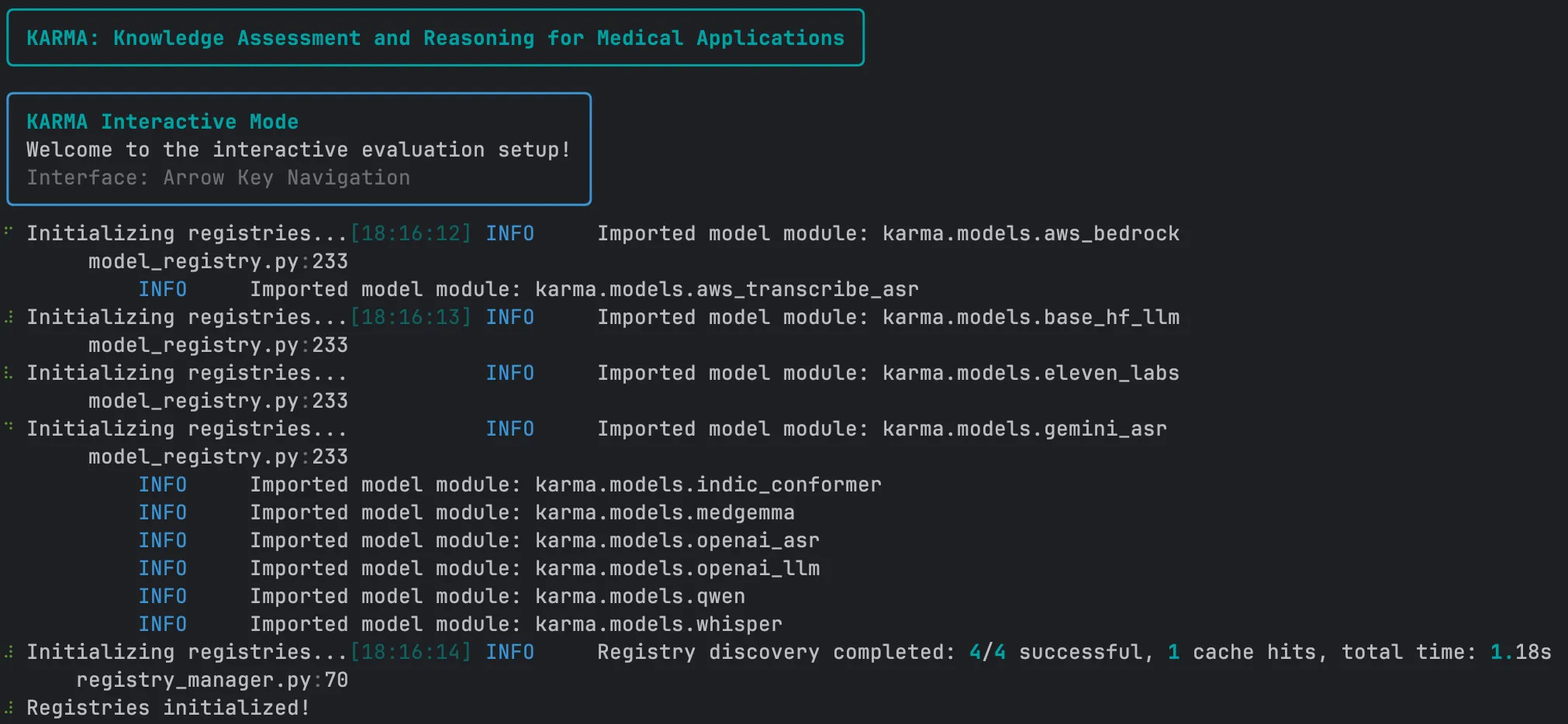

# karma interactive

KARMA’s **Interactive Mode** provides a terminal-based experience for benchmarking language and speech models.

This mode walks you through choosing a model, configuring arguments, selecting datasets, reviewing a summary, and executing the evaluations.

***

## 1. Launch Interactive Mode

[Section titled “1. Launch Interactive Mode”](#1-launch-interactive-mode)

Open your terminal in the root folder of your KARMA project and run:

```python

karma interactive

```

This starts the interactive workflow.

You will see a welcome screen indicating that the system is ready.

***

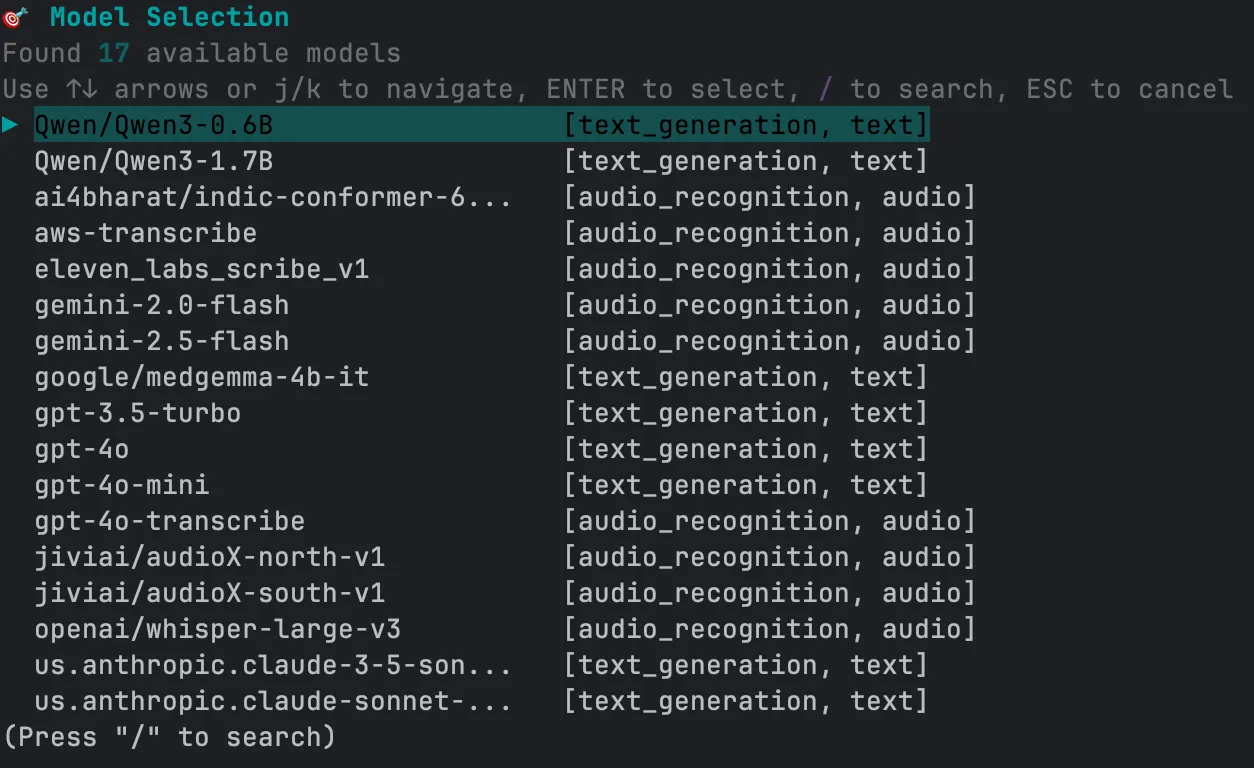

## 2. Choose a Model

[Section titled “2. Choose a Model”](#2-choose-a-model)

Next, you’ll get a list of available models.

Use the arrow keys to scroll through and hit Enter to select the one you want.

***

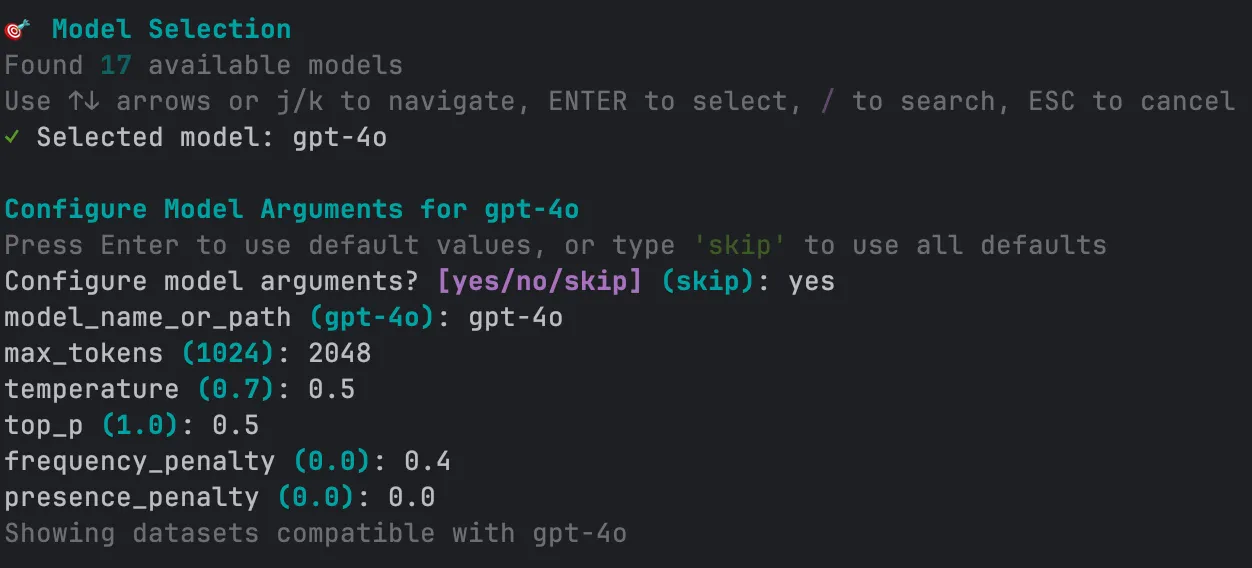

## 3. Configure Model Arguments (Optional)

[Section titled “3. Configure Model Arguments (Optional)”](#3-configure-model-arguments-optional)

Some models let you tweak parameters like `temperature` or `max_tokens`. If that’s the case, you’ll be prompted to either:

* Enter your own values

* Or press Enter to skip

***

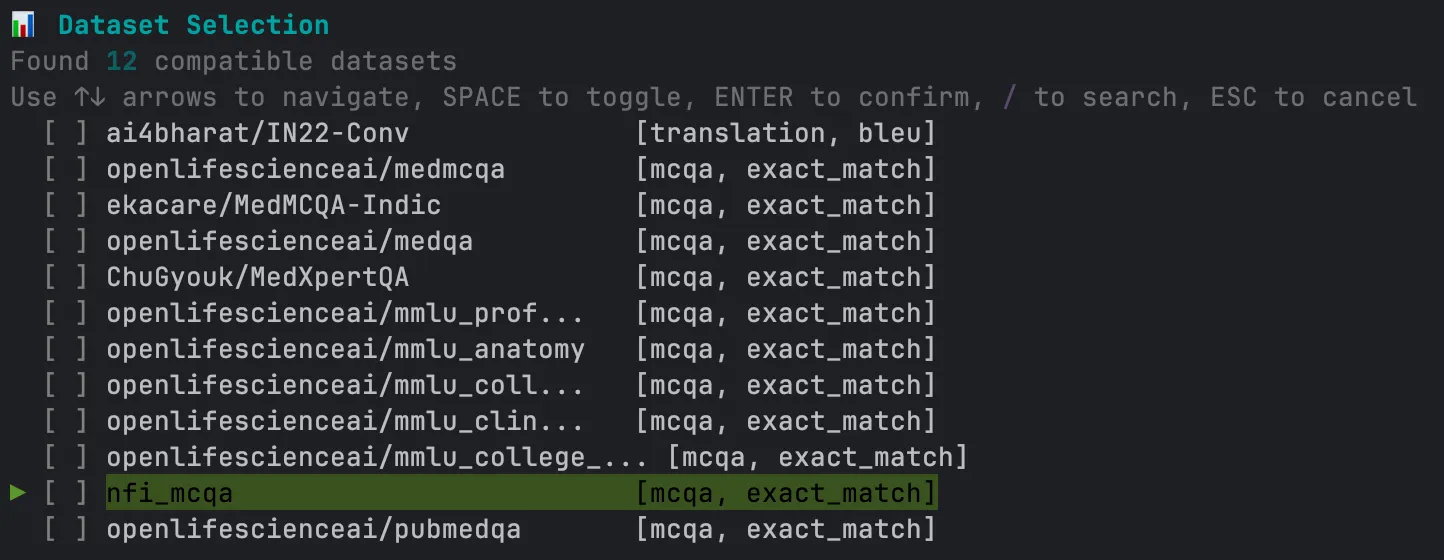

## 4. Select a Dataset

[Section titled “4. Select a Dataset”](#4-select-a-dataset)

Choose datasets against which you want to evaluate the model.

* Press `Space` to select one or more datasets

* Hit `Enter` to confirm your selection

* Use the `/` to search for specific datasets

***

## 5. Review Configuration Summary

[Section titled “5. Review Configuration Summary”](#5-review-configuration-summary)

Before continuing, you’ll be shown an **overall summary** of the configuration:

* Selected model and its arguments

* Chosen dataset(s)

Make sure everything looks right before continuing.

***

## 6. Save and Execute Evaluation

[Section titled “6. Save and Execute Evaluation”](#6-save-and-execute-evaluation)

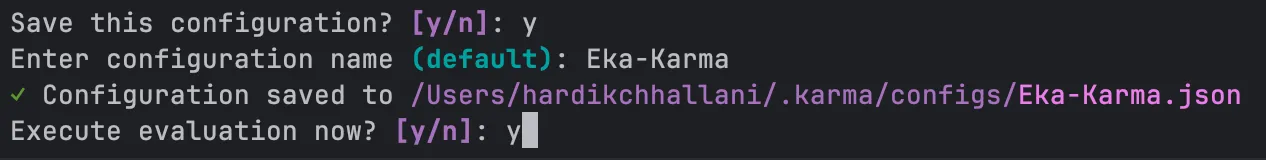

You’ll be asked if you want to:

* Save this configuration for later

* Run the evaluation now or later

Choose whatever works best for your workflow\..

***

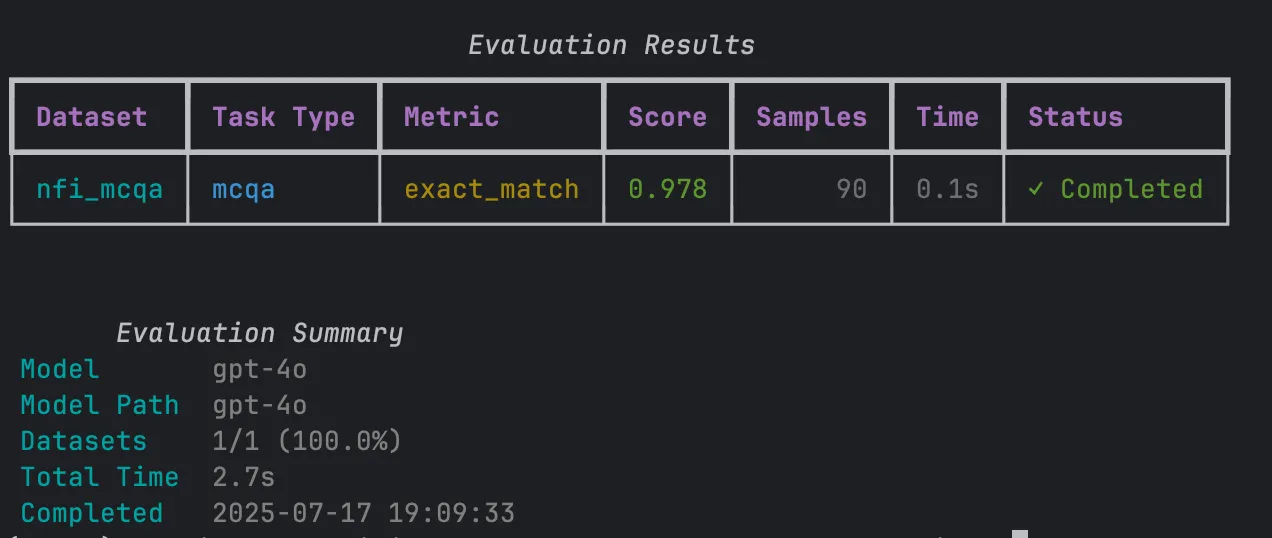

## 7. View Results

[Section titled “7. View Results”](#7-view-results)

Once the evaluation begins, you’ll see real-time progress in your terminal.

When it’s finished, the results will be displayed right away for you to review.

***

# karma list

> Complete reference for the karma list commands

The `karma list` command group provides discovery and listing functionality for all KARMA resources.

## Usage

[Section titled “Usage”](#usage)

```bash

karma list [COMMAND] [OPTIONS]

```

## Subcommands

[Section titled “Subcommands”](#subcommands)

* `karma list models` - List all available models

* `karma list datasets` - List all available datasets

* `karma list metrics` - List all available metrics

* `karma list all` - List all resources (models, datasets, and metrics)

***

## karma list models

[Section titled “karma list models”](#karma-list-models)

List all available models in the registry.

### Usage

[Section titled “Usage”](#usage-1)

```bash

karma list models [OPTIONS]

```

### Options

[Section titled “Options”](#options)

| Option | Type | Default | Description |

| ---------- | ------------------ | ------- | ------------- |

| `--format` | table\|simple\|csv | table | Output format |

### Examples

[Section titled “Examples”](#examples)

```bash

# Table format (default)

karma list models

# Simple text format

karma list models --format simple

# CSV format

karma list models --format csv

```

### Output

[Section titled “Output”](#output)

The table format shows:

* Model Name

* Status (Available/Unavailable)

* Modality (Text, Audio, Vision, etc.)

***

## karma list datasets

[Section titled “karma list datasets”](#karma-list-datasets)

List all available datasets in the registry with optional filtering.

### Usage

[Section titled “Usage”](#usage-2)

```bash

karma list datasets [OPTIONS]

```

### Options

[Section titled “Options”](#options-1)

| Option | Type | Default | Description |

| ------------------ | ------------------ | ------- | -------------------------------------------------------- |

| `--task-type TEXT` | TEXT | - | Filter by task type (e.g., ‘mcqa’, ‘vqa’, ‘translation’) |

| `--metric TEXT` | TEXT | - | Filter by supported metric (e.g., ‘accuracy’, ‘bleu’) |

| `--format` | table\|simple\|csv | table | Output format |

| `--show-args` | FLAG | false | Show detailed argument information |

### Examples

[Section titled “Examples”](#examples-1)

```bash

# List all datasets

karma list datasets

# Filter by task type

karma list datasets --task-type mcqa

# Filter by metric

karma list datasets --metric bleu

# Show detailed argument information

karma list datasets --show-args

# Multiple filters

karma list datasets --task-type translation --metric bleu

# CSV output

karma list datasets --format csv

```

### Output

[Section titled “Output”](#output-1)

The table format shows:

* Dataset Name

* Task Type

* Metrics

* Required Args

* Processors

* Split

* Commit Hash

With `--show-args`, additional details are shown:

* Required arguments with examples

* Optional arguments with defaults

* Processor information

* Usage examples

***

## karma list metrics

[Section titled “karma list metrics”](#karma-list-metrics)

List all available metrics in the registry.

### Usage

[Section titled “Usage”](#usage-3)

```bash

karma list metrics [OPTIONS]

```

### Options

[Section titled “Options”](#options-2)

| Option | Type | Default | Description |

| ---------- | ------------------ | ------- | ------------- |

| `--format` | table\|simple\|csv | table | Output format |

### Examples

[Section titled “Examples”](#examples-2)

```bash

# Table format (default)

karma list metrics

# Simple text format

karma list metrics --format simple

# CSV format

karma list metrics --format csv

```

### Output

[Section titled “Output”](#output-2)

Shows all registered metrics including:

* KARMA native metrics

* HuggingFace Evaluate metrics (as fallback)

***

## karma list all

[Section titled “karma list all”](#karma-list-all)

List both models, datasets, and metrics in one command.

### Usage

[Section titled “Usage”](#usage-4)

```bash

karma list all [OPTIONS]

```

### Options

[Section titled “Options”](#options-3)

| Option | Type | Default | Description |

| ---------- | ------------- | ------- | --------------------------------- |

| `--format` | table\|simple | table | Output format (CSV not supported) |

### Examples

[Section titled “Examples”](#examples-3)

```bash

# Show all resources

karma list all

# Simple format

karma list all --format simple

```

### Output

[Section titled “Output”](#output-3)

Displays:

1. **MODELS** section with all available models

2. **DATASETS** section with all available datasets

3. **METRICS** section with all available metrics

## Common Usage Patterns

[Section titled “Common Usage Patterns”](#common-usage-patterns)

### Discovery Workflow

[Section titled “Discovery Workflow”](#discovery-workflow)

```bash

# 1. See what models are available

karma list models

# 2. See what datasets work with medical tasks

karma list datasets --task-type mcqa

# 3. Check what metrics are available

karma list metrics

# 4. Get detailed info about a specific dataset

karma info dataset openlifescienceai/pubmedqa

```

### Integration Workflow

[Section titled “Integration Workflow”](#integration-workflow)

```bash

# Export for scripts

karma list models --format csv > models.csv

karma list datasets --format csv > datasets.csv

# Check compatibility

karma list datasets --metric exact_match

```

### Development Workflow

[Section titled “Development Workflow”](#development-workflow)

```bash

# Quick overview

karma list all

# Detailed dataset analysis

karma list datasets --show-args --format table

```

## Output Formats

[Section titled “Output Formats”](#output-formats)

### Table Format

[Section titled “Table Format”](#table-format)

* Rich formatted tables with colors and styling

* Best for interactive use

* Default format

### Simple Format

[Section titled “Simple Format”](#simple-format)

* Plain text, one item per line

* Good for scripting and piping

* Minimal formatting

### CSV Format

[Section titled “CSV Format”](#csv-format)

* Comma-separated values

* Best for data processing and exports

* Machine-readable format

## See Also

[Section titled “See Also”](#see-also)

* [Info Commands](./info.md) - Get detailed information about specific resources

* [CLI Basics](../user-guide/cli-basics.md) - General CLI usage

* [Supported Resources](../supported-resources.md) - Complete resource listing

# Supported Resources

> **Note**: This page is auto-generated during the CI/CD pipeline. Last updated: 2025-07-25 10:57:32 UTC

The following resources are currently supported by KARMA:

## Datasets

[Section titled “Datasets”](#datasets)

Currently supported datasets (20 total):

| Dataset | Task Type | Metrics | Required Args | Processors | Split |

| -------------------------------------------------- | ------------------ | ------------------------------- | ---------------------------------- | ----------------------------- | ---------- |

| ChuGyouk/MedXpertQA | mcqa | exact\_match | — | — | test |

| Tonic/Health-Bench-Eval-OSS-2025-07 | rubric\_evaluation | rubric\_evaluation | — | — | oss\_eval |

| ai4bharat/IN22-Conv | translation | bleu | source\_language, target\_language | devnagari\_transliterator | test |

| ai4bharat/IndicVoices | transcription | wer, cer, asr\_semantic\_metric | language | multilingual\_text\_processor | valid |

| ekacare/MedMCQA-Indic | mcqa | exact\_match | subset | — | test |

| ekacare/clinical\_note\_generation\_dataset | text\_to\_json | json\_rubric\_evaluation | — | — | test |

| ekacare/eka-medical-asr-evaluation-dataset | transcription | wer, cer, asr\_semantic\_metric | language | multilingual\_text\_processor | test |

| ekacare/ekacare\_medical\_history\_summarisation | rubric\_evaluation | rubric\_evaluation | — | — | test |

| ekacare/medical\_records\_parsing\_validation\_set | image\_to\_json | json\_rubric\_evaluation | — | — | test |

| ekacare/vistaar\_small\_asr\_eval | transcription | wer, cer, asr\_semantic\_metric | language | multilingual\_text\_processor | test |

| flaviagiammarino/vqa-rad | vqa | exact\_match, tokenised\_f1 | — | — | test |

| mdwiratathya/SLAKE-vqa-english | vqa | exact\_match, tokenised\_f1 | — | — | test |

| openlifescienceai/medmcqa | mcqa | exact\_match | — | — | validation |

| openlifescienceai/medqa | mcqa | exact\_match | — | — | test |

| openlifescienceai/mmlu\_anatomy | mcqa | exact\_match | — | — | test |

| openlifescienceai/mmlu\_clinical\_knowledge | mcqa | exact\_match | — | — | test |

| openlifescienceai/mmlu\_college\_biology | mcqa | exact\_match | — | — | test |

| openlifescienceai/mmlu\_college\_medicine | mcqa | exact\_match | — | — | test |

| openlifescienceai/mmlu\_professional\_medicine | mcqa | exact\_match | — | — | test |

| openlifescienceai/pubmedqa | mcqa | exact\_match | — | — | test |

Recreate this through

```plaintext

karma list datasets

```

## Models

[Section titled “Models”](#models)

Currently supported models (17 total):

| Model Name |

| -------------------------------------------- |

| Qwen/Qwen3-0.6B |

| Qwen/Qwen3-1.7B |

| aws-transcribe |

| docassistchat/default |

| ekacare/parrotlet-v-lite-4b |

| gemini-2.0-flash |

| gemini-2.5-flash |

| google/medgemma-4b-it |

| gpt-3.5-turbo |

| gpt-4.1 |

| gpt-4o |

| gpt-4o-mini |

| gpt-4o-transcribe |

| o3 |

| us.anthropic.claude-3-5-sonnet-20240620-v1:0 |

| us.anthropic.claude-3-5-sonnet-20241022-v2:0 |

| us.anthropic.claude-sonnet-4-20250514-v1:0 |

Recreate this through

```plaintext

karma list models

```

## Metrics

[Section titled “Metrics”](#metrics)

Currently supported metrics (8 total):

| Metric Name |

| ------------------------ |

| bleu |

| cer |

| exact\_match |

| f1 |

| json\_rubric\_evaluation |

| rubric\_evaluation |

| tokenised\_f1 |

| wer |

Recreate this through

```plaintext

karma list metrics

```

## Quick Reference

[Section titled “Quick Reference”](#quick-reference)

Use the following commands to explore available resources:

```bash

# List all models

karma list models

# List all datasets

karma list datasets

# List all metrics

karma list metrics

# List all processors

karma list processors

# Get detailed information about a specific resource

karma info model "Qwen/Qwen3-0.6B"

karma info dataset "openlifescienceai/pubmedqa"

```

## Adding New Resources

[Section titled “Adding New Resources”](#adding-new-resources)

To add new models, datasets, or metrics to KARMA:

* See [Adding Models](/user-guide/add-your-own/add-model.md)

* See [Adding Datasets](/user-guide/add-your-own/add-dataset.md)

* See [Metrics Overview](/user-guide/metrics/metrics_overview.md)

For more detailed information about the registry system, see the [Registry Documentation](/user-guide/registry/registries.md).

# Add dataset

You can create custom datasets by inheriting from `BaseMultimodalDataset` and implementing the `format_item` method to return a properly formatted `DataLoaderIterable`:

```python

from karma.eval_datasets.base_dataset import BaseMultimodalDataset

from karma.registries.dataset_registry import register_dataset

from karma.data_models.dataloader_iterable import DataLoaderIterable

```

Here we will use the `register_dataset` decorator to register and make the dataset discoverable to the CLI. This decorator also has information about the metric to use and any arguments that can be configured.

```python

@register_dataset(

"my_medical_dataset",

metrics=["exact_match", "accuracy"],

task_type="mcqa",

required_args=["split"],

optional_args=["subset"],

default_args={"split": "test"}

)

class MyMedicalDataset(BaseMultimodalDataset):

"""Custom medical dataset."""

def __init__(self, split: str = "test", **kwargs):

self.split = split

super().__init__(**kwargs)

def load_data(self):

# Load your dataset

return your_dataset_loader(split=self.split)

def format_item(self, item):

"""Format each item into DataLoaderIterable format."""

# Example for text-based dataset

return DataLoaderIterable(

input=f"Question: {item['question']}\nChoices: {item['choices']}",

expected_output=item['answer'],

other_args={"question_id": item['id']}

)

```

In the class, we implement the `format_item` method to specify how the output will be like through the `DataLoaderIterable` See (`DataLoaderIterable`)\[user-guide/datasets/data-loader-iterable] for more information.

## Multi-Modal Dataset Example

[Section titled “Multi-Modal Dataset Example”](#multi-modal-dataset-example)

For datasets that combine multiple modalities:

```plaintext

def format_item(self, item):

"""Format multi-modal item."""

return DataLoaderIterable(

input=f"Question: {item['question']}",

images=[item['image_bytes']], # List of image bytes

audio=item.get('audio_bytes'), # Optional audio

expected_output=item['answer'],

other_args={

"question_type": item['type'],

"difficulty": item['difficulty']

}

)

```

## Conversation Dataset Example

[Section titled “Conversation Dataset Example”](#conversation-dataset-example)

For datasets with multi-turn conversations:

```python

from karma.data_models.dataloader_iterable import Conversation, ConversationTurn

def format_item(self, item):

"""Format conversation item."""

conversation_turns = []

for turn in item['conversation']:

conversation_turns.append(

ConversationTurn(

content=turn['content'],

role=turn['role'] # 'user' or 'assistant'

)

)

return DataLoaderIterable(

conversation=Conversation(conversation_turns=conversation_turns),

system_prompt=item.get('system_prompt', ''),

expected_output=item['expected_response']

)

```

The `DataLoaderIterable` format ensures that all datasets work seamlessly with any model type, whether it’s text-only, multi-modal, or conversation-based. Models receive the appropriate data fields and can process them according to their capabilities.

## Using Local Datasets with KARMA

[Section titled “Using Local Datasets with KARMA”](#using-local-datasets-with-karma)

This guide will walk you through how to plug that dataset into KARMA’s evaluation pipeline. Let’s say we are trying to integrate an MCQA dataset.

1. Organize Your Dataset Ensure your dataset is structured correctly.\

Each row should ideally include:

* A question

* A list of options (optional)

* The correct answer

* Optionally: metadata like category, generic name, or citation

2. Set Up a Custom Dataset Class KARMA supports registering your own datasets using a decorator.

```python

@register_dataset(

dataset_name="mcqa-local",

split="test",

metrics=["exact_match"],

task_type="mcqa",

)

class LocalDataset(BaseMultimodalDataset):

...

```

This decorator registers your dataset with KARMA for evaluations.

3. Load your Dataset In your Dataset class, load your dataset file.\

You can use any format supported by pandas, such as CSV or Parquet.

```python

def __init__(self, ...):

self.data_path =

if not os.path.exists(self.data_path):

raise FileNotFoundError(f"Dataset file not found: {self.data_path}")

self.df = pd.read_parquet(self.data_path)

...

```

4. Implement the format\_item Method Each row in your dataset will be converted into an input-output pair for the model.

```python

def format_item(self, sample: Dict[str, Any]) -> DataLoaderIterable:

input_text = self._format_question(sample["data"])

correct_answer = sample["data"]["ground_truth"]

prompt = self.confinement_instructions.replace("", input_text)

dataloader_item = DataLoaderIterable(

input=prompt, expected_output=correct_answer

)

dataloader_item.conversation = None

return dataloader_item

```

5. Iterate Over the Dataset Implement `__iter__()` to yield formatted examples.

```python

def __iter__(self) -> Generator[Dict[str, Any], None, None]:

if self.dataset is None:

self.dataset = list(self.load_eval_dataset())

for idx, sample in enumerate(self.dataset):

if self.max_samples is not None and idx >= self.max_samples:

break

item = self.format_item(sample)

yield item

```

6. Handle Model Output Extract the model’s predictions.

```python

def extract_prediction(self, response: str) -> Tuple[str, bool]:

answer, success = "", False

if "Final Answer:" in response:

answer = response.split("Final Answer:")[1].strip()

if answer.startswith("(") and answer.endswith(")"):

answer = answer[1:-1]

success = True

return answer, success

```

7. Yield Examples for Evaluation Read from your DataFrame and return structured examples.

```python

def load_eval_dataset(self, ...):

for _, row in self.df.iterrows():

prediction = None

parsed_output = row.get("model_output_parsed", None)

if isinstance(parsed_output, dict):

prediction = parsed_output.get("prediction", None)

yield {

"id": row["index"],

"data": {

"question": row["question"],

"options": row["options"],

"ground_truth": row["ground_truth"],

},

"prediction": prediction,

"metadata": {

"generic_name": row.get("generic_name", None),

"category": row.get("category", None),

"citation": row.get("citation", None),

},

}

```

# Add metric

You can create custom evaluation metrics by inheriting from `BaseMetric`:

```python

from karma.metrics.base_metric_abs import BaseMetric

from karma.registries.metrics_registry import register_metric

@register_metric("medical_accuracy")

class MedicalAccuracyMetric(BaseMetric):

"""Medical-specific accuracy metric with domain weighting."""

def __init__(self, medical_term_weight=1.5):

self.medical_term_weight = medical_term_weight

self.medical_terms = self._load_medical_terms()

def evaluate(self, predictions, references, **kwargs):

"""Evaluate with medical term weighting."""

total_score = 0

total_weight = 0

for pred, ref in zip(predictions, references):

# Standard comparison

is_correct = pred.lower().strip() == ref.lower().strip()

# Apply weighting for medical terms

weight = self._get_weight(ref)

total_weight += weight

if is_correct:

total_score += weight

accuracy = total_score / total_weight if total_weight > 0 else 0.0

return {

"medical_accuracy": accuracy,

"total_examples": len(predictions),

"total_weight": total_weight

}

def _get_weight(self, text):

"""Get weight based on medical content."""

weight = 1.0

for term in self.medical_terms:

if term in text.lower():

weight = self.medical_term_weight

break

return weight

def _load_medical_terms(self):

"""Load medical terminology."""

return ["diabetes", "hypertension", "surgery", "medication",

"diagnosis", "treatment", "symptom", "therapy"]

```

### Using Custom Metrics

[Section titled “Using Custom Metrics”](#using-custom-metrics)

Once registered, custom metrics are automatically discovered and need to be specified on the dataset that you want to use.

Let’s say you would like to change the openlifescienceai/pubmedqa Update the @register\_dataset in `eval_datasets/pubmedqa.py`

```python

@register_dataset(

DATASET_NAME,

commit_hash=COMMIT_HASH,

split=SPLIT,

metrics=["exact_match", "medical_accuracy"], # we added the medical accuracy metric to this dataset

task_type="mcqa",

)

class PubMedMCQADataset(MedQADataset):

...

```

```bash

# The metric will be automatically used if specified in dataset registration

karma eval --model qwen --model-path "Qwen/Qwen3-0.6B" \

--datasets my_medical_dataset

```

# Add model

This guide provides a walkthrough for adding new models to the KARMA evaluation framework. KARMA supports diverse model types including local HuggingFace models, API-based services, and multi-modal models across text, audio, image, and video domains.

## Architecture Overview

[Section titled “Architecture Overview”](#architecture-overview)

### Base Model System

[Section titled “Base Model System”](#base-model-system)

All models in KARMA inherit from the `BaseModel` abstract class, which provides a unified interface for model loading, inference, and data processing. This ensures consistency across all model implementations and makes it easy to swap between different models during evaluation.

#### Required Method Implementation

[Section titled “Required Method Implementation”](#required-method-implementation)

Every custom model must implement these four core methods:

```python

from karma.models.base_model_abs import BaseModel

from karma.data_models.dataloader_iterable import DataLoaderIterable

```

**1. Basic Class Structure**

```python

class MyModel(BaseModel):

def load_model(self):

"""Initialize model and tokenizer/processor

This method is called once when the model is first used.

Load your model weights, tokenizer, and any required components here.

Set self.is_loaded = True when complete.

"""

pass

```

**2. Main Inference Method**

```python

def run(self, inputs: List[DataLoaderIterable]) -> List[str]:

"""Main inference method that processes a batch of inputs

This is the primary method called during evaluation.

It should handle the complete inference pipeline:

1. Check if model is loaded (call load_model if needed)

2. Preprocess inputs

3. Run model inference

4. Postprocess outputs

5. Return list of string predictions

"""

pass

```

**3. Input Preprocessing**

```python

def preprocess(self, inputs: List[DataLoaderIterable]) -> Any:

"""Convert raw inputs to model-ready format

Transform the DataLoaderIterable objects into the format

your model expects (e.g., tokenized tensors, processed images).

Handle batching, padding, and any required data transformations.

"""

pass

```

**4. Output Postprocessing**

```python

def postprocess(self, outputs: Any) -> List[str]:

"""Process model outputs to final format

Convert raw model outputs (logits, tokens, etc.) into

clean string responses that can be evaluated.

Apply any filtering, decoding, or formatting needed.

"""

pass

```

### ModelMeta System

[Section titled “ModelMeta System”](#modelmeta-system)

The `ModelMeta` class provides comprehensive metadata management for model registration. This system allows KARMA to understand your model’s capabilities, requirements, and how to instantiate it properly.

#### Understanding ModelMeta Components

[Section titled “Understanding ModelMeta Components”](#understanding-modelmeta-components)

**Import Required Classes**

```python

from karma.data_models.model_meta import ModelMeta, ModelType, ModalityType

```

**Basic ModelMeta Structure**

```python

model_meta = ModelMeta(

# Model identification - use format "organization/model-name"

name="my-model/my-model-name",

# Human-readable description for documentation

description="Description of my model",

# Python import path to your model class

loader_class="karma.models.my_model.MyModel",

)

```

**Configuration Parameters**

```python

# Parameters passed to your model's __init__ method

loader_kwargs={

"temperature": 0.7, # Generation temperature

"max_tokens": 2048, # Maximum output length

# Add any custom parameters your model needs

},

```

**Model Classification**

```python

# What type of task this model performs

model_type=ModelType.TEXT_GENERATION, # or AUDIO_RECOGNITION, MULTIMODAL, etc.

# What input types the model can handle

modalities=[ModalityType.TEXT], # TEXT, IMAGE, AUDIO, VIDEO

# What frameworks/libraries the model uses

framework=["PyTorch", "Transformers"],

```

### Data Flow

[Section titled “Data Flow”](#data-flow)

Models process data through the `DataLoaderIterable` structure. This standardized format ensures that all models receive data in a consistent way, regardless of the underlying dataset format.

#### Understanding DataLoaderIterable

[Section titled “Understanding DataLoaderIterable”](#understanding-dataloaderiterable)

The system automatically converts dataset entries into this structure before passing them to your model:

```python

from karma.data_models.dataloader_iterable import DataLoaderIterable

```

**Core Data Fields**

```python

data = DataLoaderIterable(

# Primary text input (questions, prompts, etc.)

input="Your text input here",

# System-level instructions for the model

system_prompt="System instructions",

# Ground truth answer (used for evaluation, not model input)

expected_output="Ground truth for evaluation",

)

```

**Multi-Modal Data Fields**

```python

# Image data as PIL Images or raw bytes

images=None, # List of PIL.Image or bytes objects

# Audio data in various formats

audio=None, # Audio file path, bytes, or numpy array

# Video data (for video-capable models)

video=None, # Video file path or processed frames

```

**Conversation Support**

```python

# Multi-turn conversation history

conversation=None, # List of {"role": "user/assistant", "content": "..."}}

```

**Custom Extensions**

```python

# Additional dataset-specific information

other_args={"custom_key": "custom_value"} # Any extra metadata

```

#### How Your Model Receives Data

[Section titled “How Your Model Receives Data”](#how-your-model-receives-data)

Your model’s `run()` method receives a list of these objects:

```python

def run(self, inputs: List[DataLoaderIterable]) -> List[str]:

for item in inputs:

text_input = item.input # Main question/prompt

system_msg = item.system_prompt # System instructions

images = item.images # Any associated images

# Process each item...

```

## Model Implementation Steps

[Section titled “Model Implementation Steps”](#model-implementation-steps)

### Step 1: Create Model Class

[Section titled “Step 1: Create Model Class”](#step-1-create-model-class)

Create a new Python file in the `karma/models/` directory:

karma/models/my\_model.py

```python

import torch

from typing import List, Dict, Any

from karma.models.base_model_abs import BaseModel

from karma.data_models.dataloader_iterable import DataLoaderIterable

class MyModel(BaseModel):

def __init__(self, model_name_or_path: str, **kwargs):

super().__init__(model_name_or_path, **kwargs)

self.temperature = kwargs.get("temperature", 0.7)

self.max_tokens = kwargs.get("max_tokens", 2048)

def load_model(self):

"""Load the model and tokenizer"""

# Example for HuggingFace model

from transformers import AutoModelForCausalLM, AutoTokenizer

self.model = AutoModelForCausalLM.from_pretrained(

self.model_name_or_path,

device_map=self.device,

torch_dtype=torch.bfloat16,

trust_remote_code=True

)

self.tokenizer = AutoTokenizer.from_pretrained(

self.model_name_or_path,

trust_remote_code=True

)

self.is_loaded = True

def preprocess(self, inputs: List[DataLoaderIterable]) -> Dict[str, torch.Tensor]:

"""Convert inputs to model format"""

batch_inputs = []

for item in inputs:

# Handle different input types

if item.conversation:

# Multi-turn conversation

messages = item.conversation.messages

text = self.tokenizer.apply_chat_template(

messages, tokenize=False, add_generation_prompt=True

)

else:

# Single input

text = item.input

batch_inputs.append(text)

# Tokenize batch

encoding = self.tokenizer(

batch_inputs,

padding=True,

truncation=True,

return_tensors="pt",

max_length=self.max_tokens

)

return encoding.to(self.device)

def run(self, inputs: List[DataLoaderIterable]) -> List[str]:

"""Generate model outputs"""

if not self.is_loaded:

self.load_model()

# Preprocess inputs

model_inputs = self.preprocess(inputs)

# Generate outputs

with torch.no_grad():

outputs = self.model.generate(

**model_inputs,

max_new_tokens=self.max_tokens,

temperature=self.temperature,

do_sample=True,

pad_token_id=self.tokenizer.eos_token_id

)

# Decode outputs

generated_texts = []

for i, output in enumerate(outputs):

# Remove input tokens from output

input_length = model_inputs["input_ids"][i].shape[0]

generated_tokens = output[input_length:]

text = self.tokenizer.decode(

generated_tokens,

skip_special_tokens=True

)

generated_texts.append(text)

return self.postprocess(generated_texts)

def postprocess(self, outputs: List[str]) -> List[str]:

"""Clean up generated outputs"""

cleaned_outputs = []

for output in outputs:

# Remove any unwanted tokens or formatting

cleaned = output.strip()

cleaned_outputs.append(cleaned)

return cleaned_outputs

```

### Step 2: Create ModelMeta Configuration

[Section titled “Step 2: Create ModelMeta Configuration”](#step-2-create-modelmeta-configuration)

Add ModelMeta definitions at the end of your model file:

```python

# karma/models/my_model.py (continued)

from karma.registries.model_registry import register_model_meta

from karma.data_models.model_meta import ModelMeta, ModelType, ModalityType

# Define model variants

MyModelSmall = ModelMeta(

name="my-org/my-model-small",

description="Small version of my model",

loader_class="karma.models.my_model.MyModel",

loader_kwargs={

"temperature": 0.7,

"max_tokens": 2048,

},

model_type=ModelType.TEXT_GENERATION,

modalities=[ModalityType.TEXT],

framework=["PyTorch", "Transformers"],

n_parameters=7_000_000_000,

memory_usage_mb=14_000,

)

MyModelLarge = ModelMeta(

name="my-org/my-model-large",

description="Large version of my model",

loader_class="karma.models.my_model.MyModel",

loader_kwargs={

"temperature": 0.7,

"max_tokens": 4096,

},

model_type=ModelType.TEXT_GENERATION,

modalities=[ModalityType.TEXT],

framework=["PyTorch", "Transformers"],

n_parameters=70_000_000_000,

memory_usage_mb=140_000,

)

# Register models

register_model_meta(MyModelSmall)

register_model_meta(MyModelLarge)

```

### Step 3: Verify Registration

[Section titled “Step 3: Verify Registration”](#step-3-verify-registration)

Test that your model is properly registered:

```bash

# List all models to verify registration

karma list models

# Check specific model details

karma list models --name "my-org/my-model-small"

```

## Model Types and Examples

[Section titled “Model Types and Examples”](#model-types-and-examples)

### Text Generation Models

[Section titled “Text Generation Models”](#text-generation-models)

**HuggingFace Transformers Model:**

```python

class HuggingFaceTextModel(BaseModel):

def load_model(self):

from transformers import AutoModelForCausalLM, AutoTokenizer

self.model = AutoModelForCausalLM.from_pretrained(

self.model_name_or_path,

device_map=self.device,

torch_dtype=torch.bfloat16

)

self.tokenizer = AutoTokenizer.from_pretrained(self.model_name_or_path)

self.is_loaded = True

def run(self, inputs: List[DataLoaderIterable]) -> List[str]:

# Implementation similar to Step 1 example

pass

```

**API-Based Model:**

```python

class APITextModel(BaseModel):

def __init__(self, model_name_or_path: str, **kwargs):

super().__init__(model_name_or_path, **kwargs)

self.api_key = kwargs.get("api_key")

self.base_url = kwargs.get("base_url")

def load_model(self):

import openai

self.client = openai.OpenAI(

api_key=self.api_key,

base_url=self.base_url

)

self.is_loaded = True

def run(self, inputs: List[DataLoaderIterable]) -> List[str]:

if not self.is_loaded:

self.load_model()

responses = []

for item in inputs:

response = self.client.chat.completions.create(

model=self.model_name_or_path,

messages=[{"role": "user", "content": item.input}],

temperature=self.temperature,

max_tokens=self.max_tokens

)

responses.append(response.choices[0].message.content)

return responses

```

### Audio Recognition Models

[Section titled “Audio Recognition Models”](#audio-recognition-models)

```python

class AudioRecognitionModel(BaseModel):

def load_model(self):

import whisper

self.model = whisper.load_model(self.model_name_or_path)

self.is_loaded = True

def preprocess(self, inputs: List[DataLoaderIterable]) -> List[Any]:

audio_data = []

for item in inputs:

if item.audio:

audio_data.append(item.audio)

else:

raise ValueError("Audio data is required for audio recognition")

return audio_data

def run(self, inputs: List[DataLoaderIterable]) -> List[str]:

if not self.is_loaded:

self.load_model()

audio_data = self.preprocess(inputs)

transcriptions = []

for audio in audio_data:

result = self.model.transcribe(audio)

transcriptions.append(result["text"])

return transcriptions

```

### Multi-Modal Models

[Section titled “Multi-Modal Models”](#multi-modal-models)

```python

class MultiModalModel(BaseModel):

def load_model(self):

from transformers import AutoProcessor, AutoModelForVision2Seq

self.processor = AutoProcessor.from_pretrained(self.model_name_or_path)

self.model = AutoModelForVision2Seq.from_pretrained(

self.model_name_or_path,

device_map=self.device,

torch_dtype=torch.bfloat16

)

self.is_loaded = True

def preprocess(self, inputs: List[DataLoaderIterable]) -> Dict[str, torch.Tensor]:

batch_inputs = []

for item in inputs:

# Handle text + image inputs

if item.images and item.input:

batch_inputs.append({

"text": item.input,

"images": item.images

})

else:

raise ValueError("Both text and images are required")

# Process with multi-modal processor

processed = self.processor(

text=[item["text"] for item in batch_inputs],

images=[item["images"] for item in batch_inputs],

return_tensors="pt",

padding=True

)

return processed.to(self.device)

def run(self, inputs: List[DataLoaderIterable]) -> List[str]:

if not self.is_loaded:

self.load_model()

model_inputs = self.preprocess(inputs)

with torch.no_grad():

outputs = self.model.generate(

**model_inputs,

max_new_tokens=self.max_tokens,

temperature=self.temperature

)

# Decode outputs

generated_texts = self.processor.batch_decode(

outputs, skip_special_tokens=True

)

return generated_texts

```

### ModelMeta Examples for Different Types

[Section titled “ModelMeta Examples for Different Types”](#modelmeta-examples-for-different-types)

```python

# Text generation model

TextModelMeta = ModelMeta(

name="my-org/text-model",

loader_class="karma.models.my_model.HuggingFaceTextModel",

model_type=ModelType.TEXT_GENERATION,

modalities=[ModalityType.TEXT],

framework=["PyTorch", "Transformers"],

)

# Audio recognition model

AudioModelMeta = ModelMeta(

name="my-org/audio-model",

loader_class="karma.models.my_model.AudioRecognitionModel",

model_type=ModelType.AUDIO_RECOGNITION,

modalities=[ModalityType.AUDIO],

framework=["PyTorch", "Whisper"],

audio_sample_rate=16000,

supported_audio_formats=["wav", "mp3", "flac"],

)

# Multi-modal model

MultiModalMeta = ModelMeta(

name="my-org/multimodal-model",

loader_class="karma.models.my_model.MultiModalModel",

model_type=ModelType.MULTIMODAL,

modalities=[ModalityType.TEXT, ModalityType.IMAGE],

framework=["PyTorch", "Transformers"],

vision_encoder_dim=1024,

)

```

### Logging

[Section titled “Logging”](#logging)

```python

import logging

logger = logging.getLogger(__name__)

def load_model(self):

logger.info(f"Loading model: {self.model_name_or_path}")

# ... model loading code ...

logger.info("Model loaded successfully")

```

Your model is now ready to be integrated into the KARMA evaluation framework! The system will automatically discover and make it available through the CLI and evaluation pipelines.

# Add processor

Processors are used for tweak the output of the model and then running evaluation on that output. This is typically required in cases when normalizing text for different languages or dialects. We have implmemented these for ASR specific datasets but you can use it for any dataset.

### Step 1: Create Processor Class

[Section titled “Step 1: Create Processor Class”](#step-1-create-processor-class)

karma/processors/my\_custom\_processor.py

```python

from karma.processors.base import BaseProcessor

from karma.registries.processor_registry import register_processor

@register_processor("medical_text_normalizer")

class MedicalTextNormalizer(BaseProcessor):

"""Processor for normalizing medical text."""

def __init__(self, normalize_units=True, expand_abbreviations=True):

self.normalize_units = normalize_units

self.expand_abbreviations = expand_abbreviations

self.medical_abbreviations = {

"bp": "blood pressure",

"hr": "heart rate",

"temp": "temperature",

"mg": "milligrams",

"ml": "milliliters"

}

def process(self, text: str, **kwargs) -> str:

"""Process medical text with normalization."""

if self.expand_abbreviations:

text = self._expand_abbreviations(text)

if self.normalize_units:

text = self._normalize_units(text)

return text

def _expand_abbreviations(self, text: str) -> str:

"""Expand medical abbreviations."""

for abbrev, expansion in self.medical_abbreviations.items():

text = text.replace(abbrev, expansion)

return text

def _normalize_units(self, text: str) -> str:

"""Normalize medical units."""

# Add unit normalization logic

return text

```

### Step 2: Register and Use

[Section titled “Step 2: Register and Use”](#step-2-register-and-use)

```python

# Via CLI

karma eval --model qwen --model-path "Qwen/Qwen3-0.6B" \

--datasets my_medical_dataset \

--processor-args "my_medical_dataset.medical_text_normalizer:normalize_units=True"

# Programmatically

from karma.registries.processor_registry import get_processor

processor = get_processor("medical_text_normalizer", normalize_units=True)

```

## Integration Patterns

[Section titled “Integration Patterns”](#integration-patterns)

### Dataset Integration

[Section titled “Dataset Integration”](#dataset-integration)

Processors can be integrated directly with dataset registration:

```python

@register_dataset(

"my_medical_dataset",

processors=["general_text_processor", "medical_text_normalizer"],

processor_configs={

"general_text_processor": {"lowercase": True},

"medical_text_normalizer": {"normalize_units": True}

}

)

class MyMedicalDataset(BaseMultimodalDataset):

# Dataset implementation

pass

```

## Advanced Use Cases

[Section titled “Advanced Use Cases”](#advanced-use-cases)

### Chain Multiple Processors

[Section titled “Chain Multiple Processors”](#chain-multiple-processors)

```python

# Create processor chain

from karma.registries.processor_registry import get_processor

processors = [

get_processor("general_text_processor", lowercase=True),

get_processor("medical_text_normalizer", normalize_units=True),

get_processor("multilingual_text_processor", target_language="en")

]

# Apply chain to dataset

def process_chain(text: str) -> str:

for processor in processors:

text = processor.process(text)

return text

```

### Language-Specific Processing

[Section titled “Language-Specific Processing”](#language-specific-processing)

```python

# Language-specific processor selection

def get_language_processor(language: str):

if language in ["hi", "bn", "ta"]:

return get_processor("devnagari_transliterator")

else:

return get_processor("general_text_processor")

```